Communication with your teammates is probably one of the most important aspects of designing APIs. You first need to ensure that you are on the same page about which features to deliver and make it easy for them to discover and try your software, and second, to deliver stable services. API specifications are the key to success in the first area, and one of the most widely used formats is the OpenAPI specification. This GitOps article shares a simple strategy to automate the writing and deployment of OpenAPI specs, making it easy for your team to communicate efficiently.

Feel free to share this article or drop a message with your feedback!

If you are a French speaker and want to learn Kubernetes, I highly recommend Stéphane Robert's website.

Summary

In this article, we're going to:

- Give a GitLab Runner the necessary permissions to deploy your app.

- Make a Dockerfile to ship your specs in a Swagger viewer.

- Make deployment files for your Swagger deployment, service and ingress.

- Write a .gitlab-ci.yml file that automates the versioning and deployment of the Swagger specs and viewer in Kubernetes.

You're going to end up with a repository where, after each commit prefixed by feat: or fix:, the YAML OpenAPI specs are automatically deployed to a web app where they can be accessed.

This article presupposes that you have:

- A Kubernetes cluster with a Traefik ingress controller, possibly set up thanks to this tutorial. It should be easy to adapt it to an nginx ingress controller.

- A GitLab Runner setup, possibly thanks to this tutorial.

We will expose a web app through the ingress. If your ingress faces the internet and is not behind a demilitarized zone or on a local network, your specifications will be publicly accessible.

Create a GitLab repository

Let's practice with this sample OpenAPI file:

    openapi: 3.0.0
    info:
      title: Sample API
      description: Optional multiline or single-line description in [CommonMark](http://commonmark.org/help/) or HTML.
      version: 0.1.9
    servers:
      - url: http://api.example.com/v1
        description: Optional server description, e.g. Main (production) server
      - url: http://staging-api.example.com
        description: Optional server description, e.g. Internal staging server for testing
    paths:
      /users:
        get:
          summary: Returns a list of users.
          description: Optional extended description in CommonMark or HTML.
          responses:
            "200": # status code
              description: A JSON array of user names
              content:
                application/json:
                  schema:
                    type: array
                    items:
                      type: string

We can create a local repository to store it:

    mkdir my-auto-specs
    cd my-auto-specs
    git init
    vim specs.yaml # add the file content here
    git add specs.yaml
    git commit -m "feat: initial commit"

We then need to create a repository on GitLab and add it as the origin (replace the URL with your repository's):

    git remote add origin git@gitlab.com:Faidide/automated-swagger.git
    git push origin master

Make sure to uncheck the Create an empty README.md file box in the Gitlab repo creation page if you're using the exact same commands.

(Optional) Ensure your Gitlab Runner is used

You can start by committing a dummy .gitlab-ci.yml to your repository.

    stages:
      - hello_world

    hello_world:
      image: node:17
      stage: hello_world
      script:
        - echo "Hello World"

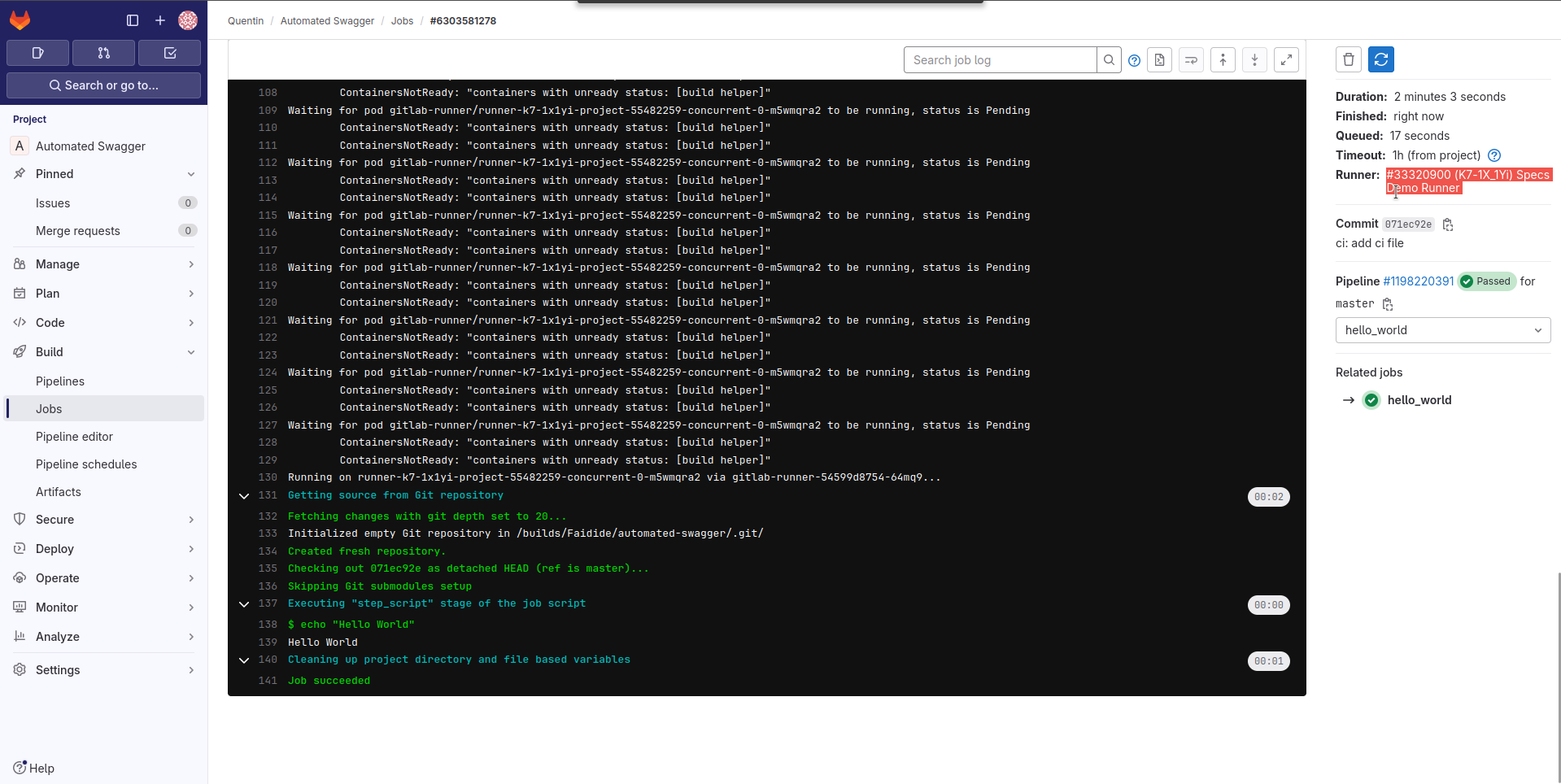

Then click the green checkmark for the job, followed by the one named Hello World; you should be able to see your runner's name on the right, as shown in the picture above.

If you have a GitLab Runner for your project but the shared runners are still used, you might want to uncheck the Enable instance runners for this project checkbox in the Runners section of the CI/CD settings page of your project.

Set up Semantic Versioning

How it works

Semantic versioning, as automated by the semantic-release project, will add a version tag to your repository based on the commit message prefix (feat:, fix:, or BREAKING CHANGE). See this website for more information.
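To make the prefix rule concrete, here is a minimal sketch of the mapping from a commit message to a release type. It assumes the default rules of the commit analyzer (feat gives a minor release, fix and perf give a patch release, a BREAKING CHANGE note gives a major release); bump_for is a hypothetical helper written for illustration, not part of semantic-release, and it ignores the finer points of the real parser.

```shell
# Hypothetical helper (not part of semantic-release): prints the release
# type that the assumed default rules would pick for a commit message.
bump_for() {
  case "$1" in
    *"BREAKING CHANGE"*)                          echo major ;;
    feat:*|"feat("*"):"*)                         echo minor ;;
    fix:*|"fix("*"):"*|perf:*|"perf("*"):"*)      echo patch ;;
    *)                                            echo none ;;
  esac
}

bump_for "feat: add Docker building step"   # prints "minor"
bump_for "fix: typo in specs"               # prints "patch"
bump_for "chore: update CI"                 # prints "none"
```

A commit that maps to none does not produce a tag, so nothing gets built or deployed for it.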

There are many types of CI pipelines in GitLab, and the two we are interested in are:

- commit pipelines: the pipelines triggered after something was committed to the repo.

- tag pipelines: the pipelines triggered after a tag has been added to the repo.

GitLab allows us to assign each job to pipeline types, and the semantic-release plugin can create tags from commit pipelines. We plan to create new version tags on commits, then build containers and deploy them inside the tag pipelines, the tag being the new version name.
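Inside a job script, the two pipeline types can be told apart with the predefined CI_COMMIT_TAG variable, which GitLab only sets in tag pipelines. The sketch below is illustrative; pipeline_kind is a hypothetical helper name, and the jobs in this article do not need it.

```shell
# Hypothetical helper: reports which kind of pipeline the job runs in.
# CI_COMMIT_TAG is a GitLab predefined variable, empty outside tag pipelines.
pipeline_kind() {
  if [ -n "${CI_COMMIT_TAG:-}" ]; then
    echo "tag pipeline for version ${CI_COMMIT_TAG}"
  else
    echo "commit pipeline"
  fi
}

pipeline_kind
```

Run outside of GitLab, or in a commit pipeline, it reports a commit pipeline because the variable is not set.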

We could have very well tagged the swagger specs viewer container image with the commit hash and used only commit pipelines. I do have a preference for the semantic versioning pattern though as it is more informative.

Create a releaserc file

Commit the following file as .releaserc.yml to your repository:

    plugins:
      - "@semantic-release/commit-analyzer"
      - "@semantic-release/release-notes-generator"
      - "@semantic-release/gitlab"
    branches:
      - "master"
      - "+([0-9])?(.{+([0-9]),x}).x"
      - name: "alpha"
        prerelease: "alpha"

Add the GITLAB TOKEN

Create a personal access token, or a project access token if you host your own GitLab instance or have a paid plan.

Then go to the repository (or group) CI settings, and add it as a CI variable named GITLAB_TOKEN.

Create the CI step for versioning

Edit the .gitlab-ci.yml file to be like the following:

    stages:
      - versionning

    release:
      # If you don't set latest here, and you didn't
      # specify a semantic release version, your pipelines
      # will regularly break.
      image: node:latest
      stage: versionning
      # only run on master branch
      only:
        refs:
          - master
      script:
        - npm install @semantic-release/gitlab
        - npx semantic-release
      # do not run on tag pipelines
      except:
        refs:
          - tags

What happens here is that we use npm to install and run the semantic-release project, which parses your commits and, when needed, creates a new version tag. We will then act on the tags in the following section.

Building containers on new tags

Writing the Dockerfile

Create a Dockerfile in the repository, and if needed replace the version with a more recent one:

    FROM docker.io/swaggerapi/swagger-ui:v5.11.8
    ENV SWAGGER_JSON="/app/specs.yaml"
    ENV PORT=80
    COPY specs.yaml /app/specs.yaml

If you don't want the Swagger viewer to live inside its own subdomain, you will also need to set ENV BASE_URL="/documentation/api-specifications" in the Dockerfile, as well as modify the ingress resource we create later in this tutorial.

Adding the CI build step

We will use Podman to build our image. This can be done as follows:

    build_image:
      stage: build_image
      # the Podman project regularly prunes old image tags, so latest is the safest choice
      image: quay.io/podman/stable:latest
      script:
        - podman login -u $CI_REGISTRY_USER -p $CI_REGISTRY_PASSWORD $CI_REGISTRY
        - podman build -t ${CI_REGISTRY_IMAGE}:${CI_COMMIT_TAG} -t ${CI_REGISTRY_IMAGE}:latest .
        - podman push ${CI_REGISTRY_IMAGE}:${CI_COMMIT_TAG}
        - podman push ${CI_REGISTRY_IMAGE}:latest
      only:
        - tags

Don't forget to add this new step to the stages list at the top of the file:

    stages:
      - versionning
      - build_image

Ensure your images are built on new versions

Make a commit containing everything, with a message that has a semantic versioning prefix, like feat: add Docker building step. It might not strictly be a feature, and would normally deserve the chore: or ci: prefixes, but it will ensure a new tag is created.

On the pipelines page https://gitlab.com/Faidide/automated-swagger/-/pipelines, which can be opened by clicking Build/Pipelines in the left sidebar, you should see a pipeline with a single release step, followed, after it completes, by a tag pipeline (where the version tag appears) with a single container building step.

The first time these pipelines run, you will need to wait a little for the image to download before they start. Don't panic if you get messages like this one:

Waiting for pod gitlab-runner/runner-k7-1x1yi-project-55482259-concurrent-0-ih8h19cj to be running, status is Pending

If the image pull takes too long, the job will time out. Don't panic; just relaunch it from the GitLab UI in that case.

If the issue persists, you can always double check with k9s by going into the GitLab Runner namespace (gitlab-runner in the message above) and ensuring that, when describing the Pending CI pods, you do get the image pulling event at the bottom of the description.

Automate deployments

We now have the image automatically building on each tag, and we want to deploy it once it is built. For this, we need to write the deployment files, ensure the GitLab Runner has permission to deploy, and instruct it to deploy the right version.

Set up registry credentials

In order for Kubernetes to pull your Docker images from GitLab's container registry, you need to give it registry credentials as a Kubernetes secret.

First create a deploy token in the GitLab repository settings page. It needs the read_registry permission. You should get a username and a password, which you then use the following way:

kubectl create secret docker-registry specs-credentials --docker-server=https://registry.gitlab.com --docker-username=USERNAME --docker-password=PASSWORD

We create the secret for this specific repository and put it in the default namespace, but it's quite common to create the deploy token in your company GitLab group, in which case you need to create the secret in every namespace where you deploy images (rerun this command with -n mynamespace).
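For readers curious about what such a docker-registry secret holds, the following sketch rebuilds the JSON document that Kubernetes stores under the .dockerconfigjson key. USERNAME and PASSWORD are the same placeholders as in the command above, not real credentials, and the script only prints text; it does not talk to a cluster.

```shell
# Placeholder values; use the deploy token username and password instead.
REGISTRY="https://registry.gitlab.com"
USERNAME="USERNAME"
PASSWORD="PASSWORD"

# The "auth" field is the base64 encoding of "username:password".
AUTH=$(printf '%s:%s' "$USERNAME" "$PASSWORD" | base64)

# Print the document in the shape Kubernetes keeps in the secret.
printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
  "$REGISTRY" "$USERNAME" "$PASSWORD" "$AUTH"
```

This shows that the secret is only encoded, not encrypted: anyone who can read secrets in the namespace can recover the deploy token, which is one more reason to limit the token to the read_registry permission.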

Deployment files

We create a deployment, a service, and an ingress resource in the default Kubernetes namespace.

Create a deployment.yaml file with the following content (don't try to apply it):

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: specs-service
      namespace: default
      annotations:
        traefik.ingress.kubernetes.io/frontend-entry-points: "https"
        traefik.ingress.kubernetes.io/redirect-entry-point: https
        traefik.ingress.kubernetes.io/redirect-permanent: "true"
    spec:
      ingressClassName: traefik
      # replace with your host and tls secret name, these are the ones from my vagrant Kubernetes deployment tutorial
      tls:
        - hosts:
            - specs.demo-cluster.io
          secretName: wildcard-tls-cert
      rules:
        - host: specs.demo-cluster.io
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: specs-service-svc
                    port:
                      number: 80
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: specs-service-svc
      namespace: default
    spec:
      ports:
        - name: app
          protocol: TCP
          port: 80
          targetPort: 80
      selector:
        serviceName: specs-service
      type: ClusterIP
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      name: specs-service
      namespace: default
    spec:
      replicas: 1
      selector:
        matchLabels:
          serviceName: specs-service
      template:
        metadata:
          labels:
            serviceName: specs-service
        spec:
          containers:
            - name: specs-service
              image: registry.gitlab.com/faidide/automated-swagger:VERSION_TO_REPLACE
              ports:
                - name: app
                  containerPort: 80
                  protocol: TCP
          imagePullSecrets:
            - name: specs-credentials
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 25%
          maxSurge: 25%

Make sure to replace the repository name with yours, and if needed tune the annotations if you don't use TLS or rely on the nginx ingress controller. Note that the version tag is VERSION_TO_REPLACE, which the CI will replace with the tag of the tag pipeline, using sed, in the next section.

Create a DNS entry for the subdomain

We used the subdomain specs.demo-cluster.io in the Ingress, which requires a matching DNS entry. If you have followed my previous tutorials for local Kubernetes in Vagrant, you can simply add another /etc/hosts entry:

192.168.56.10 specs.demo-cluster.io

Deployment CI Stage

You can now add the following step to the .gitlab-ci.yml file:

    deploy:
      image: alpine:3.19.1
      stage: deploy
      script:
        # install kubectl
        - apk add --no-cache curl
        - curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.29.2/bin/linux/amd64/kubectl
        - chmod +x ./kubectl
        # replace version number in deployment file
        - sed -i "s/VERSION_TO_REPLACE/$CI_COMMIT_TAG/g" deployment.yaml
        # make sure swagger is deployed
        - ./kubectl apply -f deployment.yaml
      only:
        - tags

Make sure to update the versions if this article gets old, and to add the deploy stage to the stages list at the top of the .gitlab-ci.yml file. The kubectl command will then default to the permissions of the pod's service account, which we need to tune.
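The sed substitution of the deploy job can be tried locally before pushing. The following is a minimal sketch that works on a throwaway one-line stand-in for deployment.yaml and assumes a tag value of v1.2.0 (a made-up example; semantic-release prefixes its tags with v by default). It writes to standard output rather than editing in place, so it behaves the same with GNU and BSD sed.

```shell
# Throwaway stand-in for deployment.yaml, so the real file stays untouched.
WORKDIR=$(mktemp -d)
printf 'image: registry.gitlab.com/faidide/automated-swagger:VERSION_TO_REPLACE\n' \
  > "$WORKDIR/deployment.yaml"

# Assumed tag value; in a tag pipeline GitLab sets CI_COMMIT_TAG itself.
CI_COMMIT_TAG="v1.2.0"

# Same expression as in the deploy job, without the in-place flag.
RENDERED=$(sed "s/VERSION_TO_REPLACE/$CI_COMMIT_TAG/g" "$WORKDIR/deployment.yaml")
echo "$RENDERED"   # prints "image: registry.gitlab.com/faidide/automated-swagger:v1.2.0"

rm -rf "$WORKDIR"
```

Note that the expression uses / as its delimiter, so it would break on a tag containing a slash; version tags such as v1.2.0 are safe.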

Giving the GitLab Runner the necessary permissions to deploy

If you push the previous deployment stage with a feat: or fix: commit prefix, you will probably get the following error:

    $ ./kubectl apply -f deployment.yaml
    Error from server (Forbidden): error when retrieving current configuration of:
    Resource: "networking.k8s.io/v1, Resource=ingresses", GroupVersionKind: "networking.k8s.io/v1, Kind=Ingress"
    Name: "specs-service", Namespace: "default"
    from server for: "deployment.yaml": ingresses.networking.k8s.io "specs-service" is forbidden: User "system:serviceaccount:gitlab-runner:default" cannot get resource "ingresses" in API group "networking.k8s.io" in the namespace "default"
    Error from server (Forbidden): error when retrieving current configuration of:
    Resource: "/v1, Resource=services", GroupVersionKind: "/v1, Kind=Service"
    Name: "specs-service-svc", Namespace: "default"
    from server for: "deployment.yaml": services "specs-service-svc" is forbidden: User "system:serviceaccount:gitlab-runner:default" cannot get resource "services" in API group "" in the namespace "default"
    Error from server (Forbidden): error when retrieving current configuration of:
    Resource: "apps/v1, Resource=deployments", GroupVersionKind: "apps/v1, Kind=Deployment"
    Name: "specs-service", Namespace: "default"
    from server for: "deployment.yaml": deployments.apps "specs-service" is forbidden: User "system:serviceaccount:gitlab-runner:default" cannot get resource "deployments" in API group "apps" in the namespace "default"

This is because the GitLab Runner permissions in Kubernetes default to those of the default service account of the runner's namespace. You therefore need to either have the GitLab Runner or job use another service account, or modify the default service account of that namespace. The GitLab documentation explains how to create and assign a service account to a runner, but we are going to directly grant the namespace's default service account the permissions we need.

Apply the following manifests to create a ClusterRole and assign it to our service account:

    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: gitlab-runner-role
    rules:
      - apiGroups: ["networking.k8s.io"]
        resources: ["ingresses"]
        verbs: ["get", "list", "watch", "create", "patch"]
      - apiGroups: [""]
        resources: ["services"]
        verbs: ["get", "list", "watch", "create", "patch"]
      - apiGroups: ["apps"]
        resources: ["deployments"]
        verbs: ["get", "list", "watch", "create", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: gitlab-runner-role-binding
    subjects:
      - kind: ServiceAccount
        name: default
        namespace: gitlab-runner
    roleRef:
      kind: ClusterRole
      name: gitlab-runner-role
      apiGroup: rbac.authorization.k8s.io

By doing this, you are creating an attack vector: if your GitLab account gets compromised, an attacker will obtain these permissions inside your cluster. If you're ever doing this in production, make sure to grant minimal permissions, and maybe use a Role rather than a ClusterRole. You should also ensure that everyone with write permission to your repository's protected branches has 2FA enabled, and that the team in charge of cybersecurity is aware of this and doesn't have a preferred alternative.
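One way to review what the service account is allowed to do is the kubectl auth can-i subcommand with impersonation. The sketch below only prints the commands, one per verb and resource taken from the ClusterRole above, so it can be read without a cluster; print_rbac_checks is a hypothetical helper name. Running the printed commands assumes your own user is allowed to impersonate service accounts, which is typically the case for a cluster administrator.

```shell
# Service account used by the runner jobs, as seen in the error message.
SA="system:serviceaccount:gitlab-runner:default"

# Hypothetical helper: prints one "kubectl auth can-i" command per
# verb/resource pair granted by the ClusterRole in this article.
print_rbac_checks() {
  for resource in ingresses.networking.k8s.io services deployments.apps; do
    for verb in get list watch create patch; do
      echo "kubectl auth can-i $verb $resource --as=$SA -n default"
    done
  done
}

print_rbac_checks
```

Piping the output to sh on a machine with cluster access should answer yes for each line once the binding is applied. Swapping a verb for one that was not granted, such as delete, is a quick way to confirm that the permissions stay as narrow as intended.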

Conclusion

Voilà! You should now be able to access https://specs.demo-cluster.io/ and the Swagger viewer.

Every time a commit is made to the master branch with a message that starts with feat: or fix:, the website will update with the latest specs.

Spread the word

If you liked this article, feel free to share it on LinkedIn, send it to your friends, or review it. Having a larger audience really makes it worth my time, and it encourages me to share more tips and tricks. You are also welcome to report any error, share your feedback or drop a message to say hi!