This article explains how to run a Kubernetes node in a Vagrant box, and how to set up a self-signed wildcard SSL certificate at the cluster level for encrypted connections to the HTTP(S) APIs.

Feel free to share this article or drop me a message with your feedback!

If you are a French speaker and want to learn Kubernetes, I highly recommend Stéphane Robert's website.

Summary

For this article, we're going to deploy a Kubernetes node in Vagrant. We will then give it a hostname and make sure SSL is properly set up.

Do not expose your Kubernetes node on your internet-facing network interfaces unless you're sure of what you're doing. It's better to keep it on a private network or behind a demilitarized zone (DMZ). It's easy for a Helm chart, or for yourself, to expose ports or services that shouldn't be.

Start a Vagrant VM

Create a folder with the following Vagrantfile to configure an Ubuntu 22.04 (Jammy) VM (you can tune the virtual disk size):

```ruby
Vagrant.configure("2") do |config|
  config.vm.box = "ubuntu/jammy64"
  config.vm.network "private_network", ip: "192.168.56.15"
  config.vm.disk :disk, size: "50GB", primary: true
  config.vm.synced_folder ".", "/vagrant", disabled: true

  # tune this as necessary
  config.vm.provider "virtualbox" do |v|
    v.memory = 2048
    v.cpus = 2
  end
end
```

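This defines a virtual machine with the private IP 192.168.56.15. To download the image, start the VM, and open an SSH session into it, run the following from that folder (VAGRANT_EXPERIMENTAL="disks" enables the config.vm.disk option used above):

```shell
VAGRANT_EXPERIMENTAL="disks" vagrant up
vagrant ssh
```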

Install k3s

K3s is a lightweight Kubernetes distribution that makes it easy to deploy Kubernetes nodes, including on IoT and other ARM-based devices. It ships with Traefik, the reverse proxy that lets us expose HTTPS APIs.

All commands on the guest VM are executed as root.

A single command deploys k3s as a systemd service. Refer to the k3s documentation for more advanced options.

```shell
sudo su
curl -sfL https://get.k3s.io | sh -s - --cluster-domain demo-cluster.io --tls-san demo-cluster.io --node-name demo-node-1
```

After a while, you should be able to list the nodes of your single-node cluster:

```
[root@localhost vagrant]# kubectl get nodes
NAME          STATUS   ROLES                  AGE     VERSION
demo-node-1   Ready    control-plane,master   2m12s   v1.28.7+k3s1
```

If kubectl keeps failing to connect, check the k3s logs with journalctl -fe -u k3s; they will likely point to the issue.
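While k3s is starting, the API server refuses connections for a while. Instead of re-running kubectl by hand, you can poll it. Below is a minimal POSIX shell sketch of a retry helper; the name retry and its argument order are my own, not part of k3s or kubectl.

```shell
# Hypothetical helper: run a command until it succeeds, giving up after
# MAX_ATTEMPTS tries and sleeping DELAY_SECONDS between attempts.
# Usage: retry MAX_ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "retry: '$*' still failing after $attempt attempts" >&2
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}
```

For example, retry 30 5 kubectl get nodes waits up to about two and a half minutes. Once the API server answers, kubectl wait --for=condition=Ready node/demo-node-1 --timeout=120s blocks until the node reports Ready.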

Give your VM a hostname

To access the server easily, you can give it a hostname that matches the cluster domain we set.

This can be done by adding the following entry to the /etc/hosts file of your host machine, using the private IP from the Vagrantfile:

```
192.168.56.15 demo-cluster.io
```
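If you add several entries (one per subdomain, since /etc/hosts has no wildcard support), a small function keeps the edit idempotent. This is a POSIX shell sketch; add_host_entry is a hypothetical name, and it takes the file path as a parameter so you can try it on a copy before touching /etc/hosts.

```shell
# Hypothetical helper: append "IP HOSTNAME" to a hosts-format FILE,
# unless HOSTNAME is already listed on a non-comment line.
# Usage: add_host_entry FILE IP HOSTNAME   (writing /etc/hosts needs root)
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 when a non-comment line has NAME as a whole field after the IP
  if awk -v n="$name" '
      !/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }
    ' "$file" 2>/dev/null; then
    echo "$name is already in $file" >&2
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

On the host, from a root shell where the function is defined: add_host_entry /etc/hosts 192.168.56.15 demo-cluster.io, then again for every subdomain you expose. The IP must match the private_network address in the Vagrantfile.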

You can also run a DNS server if you want the nodes to be easily reachable from other computers on your network (configured to use that DNS server).

Set up kubectl on your host machine

Kubectl is a command-line interface for managing (creating, editing, deleting, describing) Kubernetes resources. K9s is a convenient text-based user interface for managing a Kubernetes cluster's resources. You can also install Lens if you would prefer a graphical user interface.

Copy the kubeconfig file /etc/rancher/k3s/k3s.yaml from the guest VM to your host machine (I personally cat it and copy/paste the content), and edit it to replace 127.0.0.1 with the hostname you gave to the guest VM (tip: sed -i "s/127\.0\.0\.1/demo-cluster.io/g" k3s.yaml; the backslashes make the dots match literally).

Then, after exporting the KUBECONFIG environment variable to point to your edited file, you should be able to use your host machine's kubectl and k9s to access the cluster in the guest VM:

```shell
# this is necessary for kubectl to find your cluster
# (an absolute path keeps working if you change directories)
export KUBECONFIG="$PWD/k3s.yaml"

# this is to assert that kubectl is working
kubectl get nodes
```
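The edit can be scripted. A minimal sketch, assuming the k3s default server line (https://127.0.0.1:6443); point_kubeconfig_at is a hypothetical helper name. It escapes the dots so they only match literal dots, and keeps a .bak backup (the -i.bak form works with both GNU and BSD sed).

```shell
# Hypothetical helper: point a kubeconfig copied from k3s at HOST instead of
# the loopback address. Assumes the k3s default "server: https://127.0.0.1:6443".
# Usage: point_kubeconfig_at FILE HOST
point_kubeconfig_at() {
  # Escaped dots match literally; -i.bak writes FILE.bak before editing in place
  sed -i.bak "s#https://127\.0\.0\.1:#https://$2:#" "$1"
}
```

From the folder holding the Vagrantfile, vagrant ssh -c "sudo cat /etc/rancher/k3s/k3s.yaml" > k3s.yaml should fetch the file without copy/pasting. Then run point_kubeconfig_at k3s.yaml demo-cluster.io, and chmod 600 k3s.yaml, since the file holds admin credentials for the cluster.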

Create and install certificates

SSL/TLS certificates let clients authenticate the server they talk to, so that HTTP traffic encrypted with TLS is protected against a number of man-in-the-middle attacks (for example, via ARP spoofing) that used to be common before HTTPS became standard. On top of that, a certificate is only trusted if it is signed by a certificate authority that the operating system trusts. In this section, we create a certificate authority, use it to sign a certificate for all our cluster subdomains, and add the certificate authority to the system-wide trust store.

Generate a root certificate

This is the certificate authority that will sign the server certificate; it will need to be installed on every machine interacting with our cluster.

```shell
openssl req -x509 -newkey rsa:4096 -sha256 -nodes -keyout RootCA.key -out RootCA.crt -subj "/CN=Root CA"
```

The default validity is 30 days, so you might want to set a specific duration with the -days option (for example -days 365, replacing 365 with the desired number of days).
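For example, to create the root CA with an explicit validity and check its expiry date: 3650 days is an arbitrary choice, not a recommendation from the steps above. Restricting the key file permissions is a precaution I'm adding, since anyone holding RootCA.key can sign certificates your machines will trust.

```shell
# Create the root CA with an explicit validity (3650 days here, an arbitrary choice)
openssl req -x509 -newkey rsa:4096 -sha256 -nodes -days 3650 \
  -keyout RootCA.key -out RootCA.crt -subj "/CN=Root CA"

# Only the owner should be able to read the CA private key
chmod 600 RootCA.key

# Print the subject and the expiry date to confirm that -days took effect
openssl x509 -in RootCA.crt -noout -subject -enddate
```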

Create a configuration file

You need to create a file that holds the main configuration of the certificate signing request. I named it req.conf, and it contains the following:

```ini
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
basicConstraints = CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
subjectAltName = @alt_names

[alt_names]
DNS.1 = *.demo-cluster.io
```

Generate and sign the certificate

Then generate the certificate signing request (CSR) and sign it with the root CA:

```shell
openssl req -new -nodes -newkey rsa:2048 -keyout server.key -out server.csr -subj "/CN=*.demo-cluster.io" -config req.conf
openssl x509 -req -in server.csr -CA RootCA.crt -CAkey RootCA.key -CAcreateserial -out server.crt -days 365 -sha256 -extfile req.conf -extensions v3_req
```
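To check the result, openssl verify should report OK for server.crt against RootCA.crt. The sketch below wraps the steps of this section into one hypothetical function, make_wildcard_cert, which works in a given directory for a given domain and ends with that verification. One deliberate difference: it adds extendedKeyUsage = serverAuth to v3_req, which is not in the req.conf above; as far as I know, macOS rejects TLS server certificates without it.

```shell
# Hypothetical helper: run this section's openssl steps in DIR for *.DOMAIN,
# then verify that server.crt chains to RootCA.crt.
# The body is a subshell, so cd and the variables do not leak out,
# and "exit 1" only leaves the subshell (the function returns 1).
# Usage: make_wildcard_cert DIR DOMAIN
make_wildcard_cert() (
  dir="$1"
  domain="$2"
  cd "$dir" || exit 1

  # Root CA (3650 days is an arbitrary validity)
  openssl req -x509 -newkey rsa:4096 -sha256 -nodes -days 3650 \
    -keyout RootCA.key -out RootCA.crt -subj "/CN=Root CA" || exit 1

  # Same req.conf as above, plus extendedKeyUsage = serverAuth (an addition)
  cat > req.conf <<EOF
[req]
req_extensions = v3_req
distinguished_name = req_distinguished_name

[req_distinguished_name]

[v3_req]
basicConstraints = CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[alt_names]
DNS.1 = *.${domain}
EOF

  # CSR and signature, as in the commands above
  openssl req -new -nodes -newkey rsa:2048 -keyout server.key -out server.csr \
    -subj "/CN=*.${domain}" -config req.conf || exit 1
  openssl x509 -req -in server.csr -CA RootCA.crt -CAkey RootCA.key -CAcreateserial \
    -out server.crt -days 365 -sha256 -extfile req.conf -extensions v3_req || exit 1

  # Last command: its status is the function's status (non-zero if the chain is broken)
  openssl verify -CAfile RootCA.crt server.crt
)
```

For example, make_wildcard_cert "$(mktemp -d)" demo-cluster.io generates a throwaway set you can inspect with openssl x509 -in server.crt -noout -text before repeating the steps for real.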

Create the kubernetes secret

We can finally pass the certificate and its private key to the Traefik ingress in Kubernetes by creating a TLS secret. An Ingress can only reference secrets in its own namespace, so if you use other namespaces in the future, you will need to create the secret there as well.

```shell
kubectl create secret tls certif-secret --cert=./server.crt --key=./server.key --namespace default
```
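Since the same secret may be needed in several namespaces, one option is to render the manifest yourself and pipe it to kubectl. A minimal sketch; tls_secret_manifest is a hypothetical helper, while the kubernetes.io/tls type with tls.crt and tls.key keys is the standard layout for TLS Secrets.

```shell
# Hypothetical helper: print a kubernetes.io/tls Secret manifest holding
# CERT and KEY (base64-encoded, as Secret "data" requires) for NAMESPACE.
# Usage: tls_secret_manifest NAME NAMESPACE CERT KEY | kubectl apply -f -
tls_secret_manifest() {
  local name="$1" namespace="$2" cert="$3" key="$4"
  # tr strips the line wrapping some base64 implementations add
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: ${namespace}
type: kubernetes.io/tls
data:
  tls.crt: $(base64 < "$cert" | tr -d '\n')
  tls.key: $(base64 < "$key" | tr -d '\n')
EOF
}
```

For example: for ns in default monitoring; do tls_secret_manifest certif-secret "$ns" server.crt server.key | kubectl apply -f -; done, where monitoring stands for any existing namespace of yours. kubectl create secret tls certif-secret --cert=server.crt --key=server.key --dry-run=client -o yaml produces an equivalent manifest. Either way, the output contains the private key, so don't commit it anywhere.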

Install the RootCA on your host machine

Once the root certificate authority is installed in your host's trust store, it is trusted, and so is every certificate it signed, including ours. How to install/trust a certificate depends on your operating system. For example, on Arch and Fedora, the following works:

```shell
sudo trust anchor --store RootCA.crt
```

If you're out of options, you can alternatively get the OpenSSL directory with this command:

```shell
openssl version -d
```

Then place your RootCA.crt under its certs subdirectory and run openssl rehash on that subdirectory, since OpenSSL looks certificates up there by their hash. But I encourage you not to do this, and to use your distribution's default certificate folder and update command instead.
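Since the command differs between distributions, here is a sketch that prints the usual one for the distribution named in /etc/os-release, so you can review it before running it as root. ca_install_commands is a hypothetical helper. The Debian/Ubuntu branch relies on update-ca-certificates, which only picks up files ending in .crt under /usr/local/share/ca-certificates; the Fedora/RHEL/Arch branch uses the trust anchor command shown above.

```shell
# Hypothetical helper: print the commands that add CA_FILE to the system trust
# store, picked from the ID / ID_LIKE fields of an os-release file.
# Usage: ca_install_commands CA_FILE [OS_RELEASE_FILE]
ca_install_commands() {
  local ca="$1" os_release="${2:-/etc/os-release}" ids
  # os-release is shell-compatible; source it in a subshell to read ID and ID_LIKE
  ids=$(. "$os_release" && echo " ${ID:-} ${ID_LIKE:-} ")
  case "$ids" in
    *" debian "*|*" ubuntu "*)
      # update-ca-certificates only picks up files ending in .crt
      echo "cp '$ca' /usr/local/share/ca-certificates/RootCA.crt"
      echo "update-ca-certificates"
      ;;
    *" fedora "*|*" rhel "*|*" arch "*)
      echo "trust anchor --store '$ca'"
      ;;
    *)
      echo "unknown distribution, add $ca to your trust store manually" >&2
      return 1
      ;;
  esac
}
```

For example: ca_install_commands RootCA.crt | sudo sh. Some browsers, Firefox in particular, may use their own certificate store, so you might need to import RootCA.crt there as well.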

Create an nginx deployment to test HTTPS

If you're not familiar with Kubernetes:

- A Pod is a deployed container plus optional sidecars (example: a container running an API and a container exposing the API's monitoring metrics, living in the same Pod). Most of the time, there's only one container per Pod.

- A Deployment is a template for a set of replicated Pods that get the same labels. If a Pod fails or completes, it is recreated. Deployments "create" Pods (through ReplicaSets), but they are not the only resource that can do so.

- A Service is a stable interface for reaching Pods based on their labels.

- An Ingress is a resource that exposes a Service through an HTTP(S) reverse proxy.

- Helm is a templating system that creates all kinds of Kubernetes resources through Helm Charts, generally deploying a specific stack (like a web app with databases and volumes).

- Kubernetes is extensible: you can install controllers that act on resources and often define new ones (for example, Traefik is an ingress controller that serves Ingresses and adds its own resources such as IngressRoute).

You need to create a Deployment using the nginx image and a Service in front of it. Save the following as nginx.yaml:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
```

Then apply it:

```shell
kubectl apply -f nginx.yaml
```

Create the ingress with a subdomain

The Ingress is the final resource: it exposes the Service through HTTPS. Save this as ingress.yaml:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx
  namespace: default
  annotations:
    # Traefik v2 router annotations: serve this Ingress with TLS on the websecure entry point
    traefik.ingress.kubernetes.io/router.tls: "true"
    traefik.ingress.kubernetes.io/router.entrypoints: websecure
spec:
  ingressClassName: traefik
  tls:
    - hosts:
        - nginx.demo-cluster.io
      secretName: certif-secret
  rules:
    - host: nginx.demo-cluster.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

Apply it to the cluster:

```shell
kubectl apply -f ingress.yaml
```

Then add the nginx subdomain to your host's /etc/hosts (the file does not support wildcards, so every subdomain needs its own line):

```
192.168.56.15 nginx.demo-cluster.io
```

Redirect HTTP to HTTPS, and access the app

On the guest VM, create (or edit) /var/lib/rancher/k3s/server/manifests/traefik-config.yaml so that Traefik redirects its web (HTTP) entry point to websecure (HTTPS):

```yaml
apiVersion: helm.cattle.io/v1
kind: HelmChartConfig
metadata:
  name: traefik
  namespace: kube-system
spec:
  valuesContent: |-
    ports:
      web:
        redirectTo:
          port: websecure
```

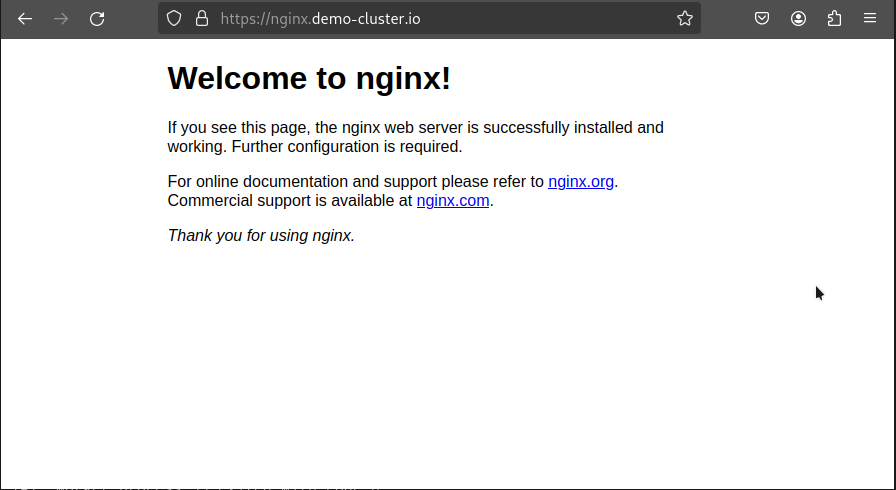

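Finally, k3s should pick up files in that manifests directory on its own; if the redirect does not take effect, restart k3s (systemctl restart k3s) or reboot the guest VM. You should now be able to access nginx.demo-cluster.io.

Make sure you are indeed using HTTPS and not plain HTTP. If you are on HTTP, the previous step likely failed.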
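You can check the redirect from the command line too. A minimal sketch: is_https_redirect is a hypothetical helper that reads response headers (for example from curl -sI) on standard input and succeeds only for a 3xx status with an https:// Location header. It accepts any 3xx code, since I'm not certain which one Traefik returns here.

```shell
# Hypothetical helper: read HTTP response headers on stdin and succeed only if
# they describe a redirect (3xx status) whose Location is an https:// URL.
# Usage: curl -sI http://nginx.demo-cluster.io/ | is_https_redirect
is_https_redirect() {
  awk '
    { sub(/\r$/, "") }                  # curl prints CRLF line endings
    NR == 1 { status = $2 }             # e.g. "HTTP/1.1 301 Moved Permanently"
    tolower($1) == "location:" && $2 ~ /^https:\/\// { https = 1 }
    END { exit !(status ~ /^3[0-9][0-9]$/ && https) }
  '
}
```

With the redirect in place, curl -sI http://nginx.demo-cluster.io/ | is_https_redirect && echo "redirected to HTTPS" should print its message. To check the certificate without relying on the system trust store, curl -I --cacert RootCA.crt https://nginx.demo-cluster.io/ should succeed without certificate errors.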

Interesting apps to deploy

Now that you have a Kubernetes cluster up and running, you have access to many interesting deployments and helm charts. I personally enjoy the following:

- Focalboard: A simple and efficient task board.

- Mediawiki: A wiki.

- Pyroscope: For live software and server flame graphs.

- Uptrace: For tracing and monitoring HTTP APIs through OpenTelemetry.

- Prometheus: The monitoring stack the world runs on. Pairs well with Grafana.

- Jupyter Lab: For your Julia/Python/R notebooks. Convenient for data science.

- RStudio Server: An environment for statistics and data science with R.

- CryptPad: A self deployed Google-Drive like webapp with advanced encryption capabilities (and even task boards).

- MinIO: To host your S3-compatible buckets and files.

- GitLab and GitLab Runners: Git hosting, container registry, and much more. You can also install only the runner to power your gitlab.com CI pipelines.

- Numaflow: Numaflow is a Kubernetes-native tool for running massively parallel stream processing. A Numaflow Pipeline is implemented as a Kubernetes custom resource and consists of one or more source, data processing, and sink vertices.

- Spark: A distributed computing framework.

Spread the word

If you liked this article, feel free to share it on LinkedIn, send it to your friends, or review it. Reaching a larger audience really makes it worth my time, and it encourages me to share more tips and tricks. You are also welcome to report any error, share your feedback, or drop a message to say hi!